
Last edited by hoshea on 2025-7-4 10:36

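Inspired by the Frigate write-ups from experts on this forum, I decided to connect my front-door camera to Frigate, add face recognition, and finally bring the results into HA over MQTT.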

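There are currently two ways to get face recognition:

1. Use Double Take and DeepStack on top of Frigate to run face recognition on the surveillance video.

2. Frigate 0.16 beta3 has added built-in support for face recognition.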

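Hardware and software used in this post:

One N150 mini PC

fnOS (飞牛OS) 0.9.12, Linux kernel 6.12

Chuangmi (创米) dual-lens Q2 camera, which supports Mi Home integration, RTSP/ONVIF streaming, and PTZ control

Face recognition with DeepStack + Double Take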

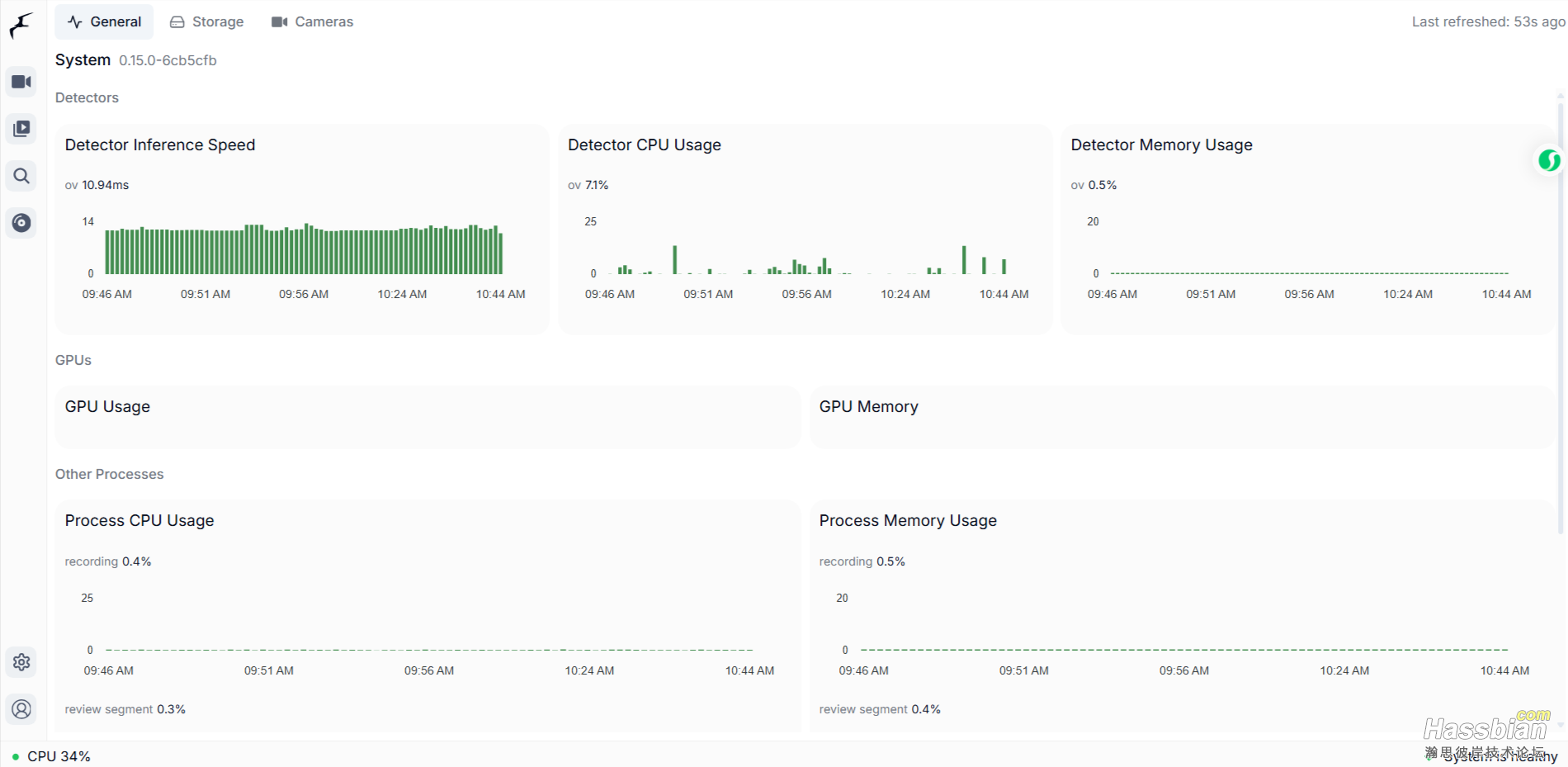

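1. With Frigate running, CPU usage on the N150 is about 30% and detection speed is around 10 ms, so in practice there is not much delay.

2. No GPU stats are shown here because SR-IOV is enabled, which is only supported starting with version 0.16.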

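1. I won't go into MQTT deployment; the tutorials available online are already detailed.

2. Deploy Frigate

Install the Frigate app from the fnOS App Center. The App Center ships version 0.14, but the N150 needs 0.15 or later, so after installing, edit the Docker Compose file as follows: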

services:
  frigate:
    container_name: frigate
    privileged: true # this may not be necessary for all setups
    restart: unless-stopped
    cap_add:
      - CAP_PERFMON
    image: ghcr.io/blakeblackshear/frigate:0.15.1
    shm_size: "512mb" # update for your cameras based on calculation above
    devices:
      - /dev/dri/renderD128:/dev/dri/renderD128 # For intel hwaccel, needs to be updated for your hardware
    volumes:
      - /etc/localtime:/etc/localtime:ro
      - /var/apps/docker-frigate/shares/config:/config
      - /var/apps/docker-frigate/shares/storage:/media/frigate
      - type: tmpfs # Optional: 1GB of memory, reduces SSD/SD Card wear
        target: /tmp/cache
        tmpfs:
          size: 1000000000
    ports:
      - "15000:5000"
      - "8554:8554" # RTSP feeds
      - "8555:8555/tcp" # WebRTC over tcp
      - "8555:8555/udp" # WebRTC over udp
    environment:
      FRIGATE_RTSP_PASSWORD: "${wizard_password}"
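The `shm_size` comment in the Compose file refers to a per-camera calculation. Below is a minimal Python sketch of that estimate, assuming the formula as I recall it from the Frigate documentation (20 buffered YUV frames per camera plus a fixed overhead) and a roughly 40 MB allowance for logs kept in shared memory. The function names and the log allowance default are my own assumptions, so check the numbers against the docs for your Frigate version.

```python
from typing import Iterable, Tuple


def frame_shm_mb(width: int, height: int) -> float:
    """Shared memory (MB) for one camera's detect stream.

    Assumption: formula recalled from the Frigate docs, not taken from this post.
    """
    return (width * height * 1.5 * 20 + 270480) / 1048576


def total_shm_mb(cameras: Iterable[Tuple[int, int]], log_mb: float = 40.0) -> float:
    """Sum the per-camera estimates and add a one-off allowance for logs (assumed 40 MB)."""
    return sum(frame_shm_mb(w, h) for w, h in cameras) + log_mb


if __name__ == "__main__":
    # One camera detecting at 1280x720, as in the Frigate config used in this post.
    needed = total_shm_mb([(1280, 720)])
    print(f"estimated shm needed: {needed:.2f} MB (configured: 512 MB)")
```

With a single 1280x720 detect stream the estimate is far below the 512 MB configured above, so the value in the Compose file leaves plenty of headroom for more cameras.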

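Once Frigate is running, enter the configuration below in the Config Editor and restart. I recommend following the official steps first and confirming that the camera is connected correctly before adding anything else. Reference documentation: https://docs.frigate-cn.video/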

ui:
  timezone: Asia/Shanghai

mqtt: # needed for HA integration; requires an MQTT broker to be installed
  enabled: true
  host: 192.168.123.237
  user: frigate
  password: frigate

go2rtc:
  streams:
    # camera2:
    #   - onvif://admin:[email protected]:8080
    camera:
      - rtsp://admin:[email protected]:555/main_stream1 # bullet camera

ffmpeg:
  hwaccel_args: preset-intel-qsv-h265

detectors:
  ov: # inference on the Intel GPU
    type: openvino
    device: GPU

model: # used by OpenVINO; this model ships with the container
  width: 300
  height: 300
  input_tensor: nhwc
  input_pixel_format: bgr
  path: /openvino-model/ssdlite_mobilenet_v2.xml
  labelmap_path: /openvino-model/coco_91cl_bkgr.txt

cameras:
  # camera2: # <------ Name the camera
  #   enabled: true
  #   ffmpeg:
  #     output_args:
  #       record: preset-record-generic
  #     inputs:
  #       - path: rtsp://127.0.0.1:8554/camera2
  #         input_args: preset-rtsp-restream-low-latency
  #   onvif:
  #     host: 192.168.123.135
  #     port: 8080
  #     user: admin
  #     password: HosheaXu2025
  camera: # <------ Name the camera
    enabled: true
    ffmpeg:
      output_args:
        record: preset-record-generic-audio-copy
      inputs:
        - path: rtsp://127.0.0.1:8554/camera
          input_args: preset-rtsp-restream-low-latency
          roles:
            - detect
            - record
    detect:
      enabled: true
      width: 1280
      height: 720
      fps: 10
      annotation_offset: -1000
    objects:
      track: # objects to track
        - person
        - dog
      mask:
        - 0.001,0,0.26,0.002,0.236,0.152,0.08,0.215,0.105,0.588,0.113,0.776,0.159,0.78,0.221,0.986,0.003,0.989
        - 0.993,0.004,0.562,0.005,0.404,0.007,0.549,0.392,0.71,0.612,0.907,0.89,0.994,0.935
    # Zones are normally drawn in the web UI rather than written by hand, but you can
    # predefine a placeholder zone here so that parameters the web UI cannot set are in
    # place; adjust the zone area in the web UI afterwards.
    zones:
      camera-person: # zone name, your choice
        # Zone coordinates; edit them in the web UI. These placeholder values do not
        # match the real scene and are only here so the parameters below can be added.
        coordinates: 0.119,0.595,0.234,0.966,0.876,0.922,0.539,0.412,0.389,0.02,0.292,0.052,0.357,0.481,0.258,0.536,0.244,0.421,0.105,0.486
        loitering_time: 0
        objects: person # track people only
        inertia: 3
        # Filter parameters: the defaults are inaccurate for a person lying down;
        # adjust the ratio values below as needed.
        #filters:
        #  person:
        #    min_ratio: 0.3
        #    max_ratio: 3.0
    # Motion is also normally tuned in the web UI; a placeholder can be predefined here
    # and adjusted in the web UI later.
    motion:
      threshold: 30
      contour_area: 30
      improve_contrast: true
    review: # review options
      alerts: # alert clips only record specific objects in specific zones
        required_zones:
          - camera-person
        labels:
          - person
    snapshots: # snapshots only record specific zones
      required_zones:
        - camera-person
    record:
      enabled: true
      retain:
        days: 14
        mode: all # 14 days of continuous recording
      alerts:
        retain:
          days: 7
          mode: motion # keep segments with motion for 7 days

version: 0.15-1
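The mask and zone coordinates in the config above are relative values between 0 and 1, written as a flat list of x,y pairs. When tuning a zone by hand it can help to see where those points land on the 1280x720 detect frame. The following Python sketch converts such a string to pixel positions; the helper name `to_pixels` is hypothetical and not part of Frigate.

```python
from typing import List, Tuple


def to_pixels(coords: str, width: int, height: int) -> List[Tuple[int, int]]:
    """Convert a 'x1,y1,x2,y2,...' string of relative coordinates to pixel points."""
    values = [float(v) for v in coords.split(",")]
    if len(values) % 2 != 0:
        raise ValueError("coordinates must be a list of x,y pairs")
    if any(not 0.0 <= v <= 1.0 for v in values):
        raise ValueError("relative coordinates must lie between 0 and 1")
    # Even positions are x values, odd positions are y values.
    return [
        (round(x * width), round(y * height))
        for x, y in zip(values[0::2], values[1::2])
    ]


if __name__ == "__main__":
    # The placeholder zone from the config, on the 1280x720 detect frame.
    zone = (
        "0.119,0.595,0.234,0.966,0.876,0.922,0.539,0.412,0.389,0.02,"
        "0.292,0.052,0.357,0.481,0.258,0.536,0.244,0.421,0.105,0.486"
    )
    for point in to_pixels(zone, 1280, 720):
        print(point)
```

Because the values are relative, the same zone definition should keep working if the detect resolution is changed later; only the pixel positions printed here would differ.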

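3. Deploy Double Take

Double Take supports several face recognition backends. DeepStack is simple to deploy and get started with, although recognition accuracy has to be tuned through training. The steps below are based on: Double Take deployment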

services:
  double-take:
    container_name: double-take
    image: skrashevich/double-take
    restart: unless-stopped
    volumes:
      - ./data:/.storage
    ports:
      - 3000:3000

4. Deploy DeepStack

services:
  deepstack:
    image: deepquestai/deepstack
    container_name: deepstack
    restart: unless-stopped
    environment:
      - VISION-FACE=True
    volumes:
      - ./data:/datastore
    ports:
      - "5002:5000"

5. Set up the config in Double Take

mqtt:
  host: 192.168.123.xxx
  username: xxx # Double Take uses `username` here (Frigate's own config uses `user`)
  password: xxx
  topics:
    frigate: frigate/events
    matches: double-take/matches
    cameras: double-take/cameras

detect:
  match:
    save: true
    # minimum confidence
    confidence: 60
    # retention time, in hours
    purge: 168
  unknown:
    save: true # keep unrecognized faces

frigate:
  url: http://192.168.123.xxx:xxx # your Frigate address
  # objects to run recognition on
  labels:
    - person

# use DeepStack for recognition
detectors:
  deepstack:
    url: http://192.168.123.xxx:5002
    timeout: 15

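If everything works, open an MQTT web client and look at the messages Double Take publishes: if they include the double-take/matches topic, the setup has succeeded. From there, MQTT auto-discovery in HA brings the results into HA.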
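To make that check concrete, here is a small Python sketch that inspects one message body copied from the double-take/matches topic and applies the same minimum confidence of 60 used in the Double Take config. The sample payload is illustrative only: its field names (`camera`, `match.name`, `match.confidence`, `match.detector`) follow my recollection of the Double Take README and the person name `alice` is made up, so compare it with what your broker actually shows before relying on it.

```python
import json
from typing import Optional, Tuple

# Illustrative payload (an assumption, not captured from a real system).
SAMPLE = json.dumps({
    "id": "example-event-id",
    "camera": "camera",
    "match": {"name": "alice", "confidence": 66.07, "detector": "deepstack"},
})


def accepted_match(payload: str, min_confidence: float = 60.0) -> Optional[Tuple[str, float]]:
    """Return (name, confidence) if the payload holds a match at or above the threshold."""
    data = json.loads(payload)
    match = data.get("match") or {}
    name = match.get("name")
    confidence = match.get("confidence")
    if name is None or confidence is None:
        return None  # not a match message, or an unexpected shape
    if float(confidence) < min_confidence:
        return None  # below the configured minimum confidence
    return name, float(confidence)


if __name__ == "__main__":
    print(accepted_match(SAMPLE))
```

Paste a real message from your MQTT web client in place of `SAMPLE` to see whether it would pass the threshold; a subscriber library can be added later if you want to do this continuously.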

References:

A 10,000-word guide to getting started with Frigate AI surveillance

https://bbs.hassbian.com/thread-29310-1-1.html

Face recognition on video surveillance using Double Take and DeepStack on top of Frigate
